Decentralised artificial intelligence is being hailed as one of the most transformative developments of the modern era. Its promise lies in shifting the control of AI from a handful of major technology corporations to a globally distributed network of developers, researchers, and enthusiasts. By giving more individuals the ability to contribute to and interact with powerful AI systems, decentralised AI aims to democratise access, foster innovation, and make intelligent technologies more transparent and inclusive. Yet, while its vision is compelling, achieving it requires overcoming substantial technical, operational, and ethical challenges.

The Vision of a Democratised AI Landscape

Today, development of the most advanced AI models is dominated by a few well-known players, including OpenAI, Google, Microsoft, Anthropic, and DeepSeek. This concentration mirrors the internet's gradual consolidation around a handful of dominant platforms, leaving a small number of entities with outsized influence over access, capabilities, and the pace of innovation. Decentralised AI seeks to break this pattern by creating an ecosystem where knowledge, data, and computing resources are shared openly, allowing global participation.

The potential benefits of this approach are considerable. Individual developers, startups, students, and hobbyists could all contribute to AI development, collectively driving what many envision as “democratised innovation.” Transparency is another key advantage: openly available AI models running on distributed networks, potentially reinforced by blockchain technology, can make it easier to detect bias, toxic behaviour, or other undesirable outputs, because anyone can inspect and test them. By contrast, centralised models often operate as opaque “black boxes,” leaving users in the dark about decision-making processes or potential ethical pitfalls.

Decentralised AI also promises greater resistance to censorship and improved accessibility. Large corporations often embed content restrictions in their models and charge for usage, limiting access to those who can afford it. In a decentralised system, open and community-governed models would be more accessible, allowing broader participation and potentially bypassing restrictive content filters. This openness could foster a more inclusive AI ecosystem where innovation is driven by collective contribution rather than financial capability.

Technical Hurdles and Computational Challenges

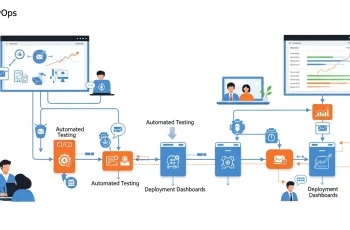

Despite its promise, decentralised AI faces a range of complex technical obstacles. Data integrity and synchronisation are particularly challenging. Techniques such as federated learning can coordinate model training across multiple nodes without pooling their raw data, but because no single party can inspect every node's data, they remain exposed to data poisoning or manipulation, which could skew results and compromise model reliability. Adding a blockchain layer might improve transparency, but it also introduces overhead that can slow data processing and hinder innovation.
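To make the poisoning risk concrete, the sketch below shows a simplified, unweighted form of federated averaging, in which a coordinator takes the element-wise mean of the updates sent by each node. A single malicious node can drag that mean arbitrarily far. The coordinate-wise median is shown alongside it as one commonly studied robust alternative; it is an illustrative substitution rather than a technique named in this article, and all of the client updates are hypothetical numbers.

```python
# Minimal sketch: one poisoned client skews plain (unweighted) federated
# averaging, while a coordinate-wise median resists it.
# All update values are hypothetical.
from statistics import median


def federated_average(updates):
    """Simplified, unweighted federated averaging: element-wise mean of updates."""
    n = len(updates)
    return [sum(values) / n for values in zip(*updates)]


def coordinate_median(updates):
    """Robust alternative: element-wise median of updates."""
    return [median(values) for values in zip(*updates)]


# Hypothetical updates from four honest clients (two parameters each)
honest = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.21],
    [0.11, -0.19],
]
# A single malicious client submits an extreme update
poisoned = [50.0, 50.0]
updates = honest + [poisoned]

print("mean:  ", federated_average(updates))
print("median:", coordinate_median(updates))
```

With four honest nodes and one poisoned node, the mean lands far from every honest update while the median stays at [0.11, -0.19]. The median only holds while fewer than half of the nodes are malicious, which is why robust aggregation reduces rather than eliminates the poisoning risk.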

Efficiency is another critical concern. Distributed networks, while potentially reducing costs and bias, may struggle to match the computational performance of centralised systems. Advanced AI models require access to vast arrays of GPUs and highly optimised infrastructure. Coordinating these resources across a dispersed network is a formidable logistical challenge, particularly when training sophisticated models on limited or heterogeneous hardware, where every synchronisation step must travel over links far slower than a data centre's internal interconnects.
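A back-of-the-envelope calculation shows why coordination across a dispersed network is so costly. The sketch estimates how long it takes to send one full copy of a model's weights or gradients over a single link; the 7-billion-parameter model, 16-bit precision, 100 Mbit/s home connection and 400 Gbit/s data-centre interconnect are illustrative assumptions, not figures from this article.

```python
# Back-of-the-envelope estimate of the time needed to exchange one full set
# of model weights (or gradients) over a network link.
# All figures are illustrative assumptions, not measurements.


def transfer_seconds(num_params, bytes_per_param, link_gbps):
    """Seconds to send num_params values of bytes_per_param bytes at link_gbps."""
    total_bits = num_params * bytes_per_param * 8
    return total_bits / (link_gbps * 1e9)


PARAMS = 7e9      # assumed model size: 7 billion parameters
BYTES_FP16 = 2    # 16-bit floating-point values

home = transfer_seconds(PARAMS, BYTES_FP16, link_gbps=0.1)        # 100 Mbit/s uplink
datacentre = transfer_seconds(PARAMS, BYTES_FP16, link_gbps=400)  # 400 Gbit/s interconnect

print(f"home link: {home / 60:.1f} minutes per exchange")
print(f"data-centre link: {datacentre:.2f} seconds per exchange")
```

Under these assumptions a single exchange takes about 18.7 minutes on the home link versus 0.28 seconds inside the data centre, a 4,000-fold gap that recurs at every synchronisation step. Techniques such as gradient compression and less frequent synchronisation are commonly used to narrow it.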

Some solutions are emerging. For example, frameworks that divide model training into smaller tasks distributed across multiple nodes allow decentralised networks to parallelise computation and synchronise results efficiently. Such approaches could enable smaller teams or individuals to train highly capable models without requiring access to expensive, high-speed data centres. By optimising network efficiency and resource allocation, decentralised AI can become more feasible even on modest hardware and bandwidth.
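The article does not name a specific framework, but one common way to split training into smaller tasks is data parallelism: each node computes a gradient on its own shard of the data, and the nodes are synchronised by averaging those gradients before every update. The minimal sketch below simulates this in a single process, assuming a hypothetical one-parameter model y = w * x; the helper names and data are illustrative.

```python
# Minimal sketch of data-parallel training, simulated in one process.
# Each "node" computes a gradient on its own shard; a coordinator synchronises
# them by averaging, weighted by shard size. Model and data are hypothetical.


def shard(data, num_nodes):
    """Split data into at most num_nodes contiguous, roughly equal shards."""
    size = -(-len(data) // num_nodes)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def local_gradient(w, shard_data):
    """Mean-squared-error gradient of y = w * x on one node's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard_data) / len(shard_data)


def synchronised_gradient(w, shards):
    """Combine per-node gradients, weighting each by its shard size."""
    total = sum(len(s) for s in shards)
    return sum(local_gradient(w, s) * len(s) for s in shards) / total


def train(data, num_nodes=3, lr=0.01, steps=200):
    shards = shard(data, num_nodes)
    w = 0.0
    for _ in range(steps):
        # In a real network, each local_gradient call would run on a different machine
        w -= lr * synchronised_gradient(w, shards)
    return w


# Noise-free data generated from y = 3x, so training should recover w close to 3
data = [(float(x), 3.0 * x) for x in range(1, 11)]
print(round(train(data), 3))  # 3.0
```

Because the per-node gradients are weighted by shard size, the synchronised gradient equals the one a single machine would compute over the whole dataset. In practice the expensive part is the synchronisation step itself, since it requires moving gradients between nodes.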

Security and Ethical Considerations

Security is a pressing concern in decentralised AI. While decentralisation reduces the risk of a single point of failure, it simultaneously increases the attack surface, exposing the system to potential threats across countless endpoints. Unlike centralised AI, where infrastructure and access can be strictly monitored, distributed networks must defend against malicious actors who could manipulate data, compromise models, or exploit vulnerabilities.
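One way to make manipulation of shared model updates detectable is a tamper-evident, hash-chained log, the core data structure behind a blockchain ledger. In the minimal sketch below, each entry stores a SHA-256 hash computed over its record and the previous entry's hash, so altering any earlier update invalidates the chain. The record fields (`node`, `round`, `delta`) are hypothetical, and the sketch deliberately omits the replication and consensus that a real blockchain adds.

```python
# Minimal sketch of a tamper-evident, hash-chained log of model updates.
# Record fields are hypothetical; no replication or consensus is modelled.
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, record):
    """SHA-256 over the previous entry's hash and this record (canonical JSON)."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, record):
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": entry_hash(prev_hash, record)})


def verify(log):
    """Return True only if every back-link and stored hash is consistent."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append(log, {"node": "node-a", "round": 1, "delta": [0.10, -0.20]})
append(log, {"node": "node-b", "round": 1, "delta": [0.12, -0.18]})
print(verify(log))  # True

# A malicious edit to an earlier update breaks the chain
log[0]["record"]["delta"] = [50.0, 50.0]
print(verify(log))  # False
```

Hash chaining only detects tampering if honest participants keep their own copies of the log: an attacker who controls the only copy can simply recompute every hash. This is why blockchain designs replicate the ledger across many participants, and also why such a layer adds processing overhead.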

Ethical governance poses an equally significant challenge. In decentralised systems, questions of accountability arise: who decides what aspects of a model are updated, what guardrails are implemented, and who is responsible if errors or harm occur? Without clear governance structures, there is a risk of creating an “ethical vacuum,” where powerful AI tools could be misused without recourse. Hybrid models, combining AI’s computational power with human oversight, are one proposed solution, allowing collective input while ensuring that human judgment guides critical decisions.

Balancing Opportunity with Risk

The potential of decentralised AI is enormous. By enabling global collaboration, fostering transparency, and reducing barriers to participation, it could redefine how AI is developed, shared, and applied. Advocates envision a world where innovation is driven not by a few dominant corporations but by a globally distributed community, opening opportunities for individuals and small organisations to contribute to and benefit from AI technology.

However, the path forward is fraught with challenges. Data integrity, network efficiency, security, and governance remain significant hurdles. Without robust frameworks and strategic oversight, decentralised AI could struggle with inefficiency, ethical lapses, and vulnerability to misuse. Careful design, technological innovation, and transparent governance mechanisms are essential to prevent these risks from undermining the technology’s promise.

Ultimately, the future of decentralised AI depends on finding the right balance: harnessing the benefits of collective development and open participation while implementing safeguards to ensure security, accountability, and ethical integrity. If successful, it could democratise access to intelligent technologies and create a more equitable, collaborative AI ecosystem. But without careful attention to the challenges, decentralised AI could also amplify existing risks, highlighting the need for cautious optimism as the field advances.